Following the success of NESE Ceph storage and its buy-ins, and seeing the scientific need for exascale storage, we began investigating a possible NESE storage tier capable of growing to exascale capacity over the next few years.

Posted by Saul Youssef

Co-PI Professor Tiwari of Northeastern University led this work. He and his team investigated technologies including traditional spinning disk drives, optical disk drives, magnetic tape, SSD and even exotic technologies like DNA storage. Figure 5 illustrates the historical and expected trends of bit density in hard drives compared with magnetic tape. The essential point is that the bit density of tape is expected to keep improving in Moore’s law fashion for many years, with many doublings still to come, while HDD density increases are technologically much more difficult. Because of this, it is generally expected that the per-terabyte cost advantage of tape over HDD will continue to grow. The densest tape media currently available are the IBM enterprise 20 TB cartridges. It is encouraging that cartridges of more than 200 TB have already been demonstrated in lab settings for several years, consistent with the expected density ramp-up over the coming years, with 400 TB tape cartridges anticipated in 2028.
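As a rough check on the density ramp described above, the implied doubling time can be estimated from the two cartridge capacities quoted. This is only a back-of-the-envelope sketch: the 2020 starting year is an assumption (taken from the date of the purchase decision), smooth exponential growth is assumed, and the function name is purely illustrative.

```python
import math


def doubling_time_years(cap_start_tb, cap_end_tb, year_start, year_end):
    """Years per capacity doubling, assuming smooth exponential growth."""
    doublings = math.log2(cap_end_tb / cap_start_tb)
    return (year_end - year_start) / doublings


# 20 TB cartridges today (assumed here to mean 2020), 400 TB anticipated in 2028.
doublings = math.log2(400 / 20)
period = doubling_time_years(20, 400, 2020, 2028)
print(f"{doublings:.2f} doublings, one roughly every {period:.2f} years")
```

Under these assumptions, the quoted figures correspond to a little over four doublings, with cartridge capacity doubling slightly faster than once every two years.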

When total cost of ownership is considered, tape is strongly favored over hard disks, by approximately a factor of six, as shown in Figure 6. In our shared facility case, where the equipment and media costs are shared across a consortium, the situation becomes even more favorable for tape, as will be clear below.

Surveying industry and academic sites only reinforced our conclusions, particularly since commercial cloud providers are now heavily invested in magnetic tape libraries and expect to remain so indefinitely. Tape libraries also have the attractive feature that storage capacity and I/O capacity can be increased independently, by separately adding media, tape drives and disk caching storage. Since research projects staging petabytes of data almost inevitably care about total throughput rather than latency, we have an opportunity to build a system that has the best of all worlds: low cost per terabyte combined with high bandwidth from multiple 100 Gbps networks throughout the MGHPCC data center floor.

Although the basic technological arguments for tape were compelling, there remained three major potential problem areas which we needed to understand before NESE as a collaboration could take on building and operating storage of this type.

1. NESE and our collaborating institutional teams have no experience operating large-scale tape libraries and the associated front-end disk cache systems.
2. Many large-scale tape systems are used for relatively cold storage. Since we intend to use the system for high-performance 24/7 staging of data in and out as well as for long-term cold archival storage, we had to find a solution capable of both.
3. The tape media and tape drive markets are near monopolies, and it is not immediately obvious that we will be able to get sufficiently good pricing.

To resolve these issues, we spent considerable effort over the past year learning from the tape library experience of established facilities, and going through detailed proposals, configurations and pricing with all three of the major tape library manufacturers. As part of this process, we held an extensive series of meetings with the following organizations, in person before the pandemic and by video afterwards. The NESE collaborators attending these meetings included John Goodhue (MGHPCC and MIT), Devesh Tiwari (NEU), Scott Yockel (Harvard) and Saul Youssef (BU). From these meetings, we learned the pitfalls of operating such tape systems and the strengths and weaknesses of the major tape vendors. In particular, from the use of such systems at Large Hadron Collider Tier 1 centers, we were able to conclude that these systems can indeed be operated successfully at full I/O throughput, essentially 24/7, indefinitely.

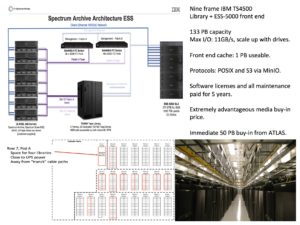

Armed with this experience, we wrote a request for proposal and received an extensive series of briefings, proposals, configurations and quotes from all three major tape library manufacturers: IBM, Quantum Inc. and Spectra Logic. In mid 2020, we reached final quotes and decided to go with the IBM TS4500 solution shown in the figure below. The initial nine-frame library uses IBM enterprise media storing 20 TB per cartridge and 20 PB per full storage frame. When full, the initial library has a total capacity of 133 PB, with a maximum I/O rate of 11 GB/s, fronted by a 1 PB Spectrum Scale/Spectrum Archive file system and twelve Fibre Channel connections to six NSD servers (Lenovo SR630, 2x Intel 8268 48c, 384 GB RAM, 2x 100 GbE, 2x 32 Gb Fibre Channel). The overall file system is presented both as a POSIX file system and as S3 via MinIO running on each NSD server. The figure below also shows a pod reserved on the MGHPCC floor for the new system, arranged by co-PI John Goodhue, the MGHPCC Executive Director. In addition to the long-term MGHPCC institutional commitment, this step means that we have physical space and power for a second full system of the same size. Because of the prominence and growth potential of the system, vendor interest in our site was very high and we were able to get excellent pricing, in particular a very low media cost guaranteed for two years. This means that we can add one new, similarly sized tape library roughly once every two years to keep pace with the expected increases in tape density.
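To put the quoted figures in proportion, the short calculation below derives a few quantities from them: the number of cartridges in a full library, the time needed to stream the whole library once at the maximum I/O rate, and the nominal aggregate link rates of the NSD servers. It is a sketch under stated assumptions: decimal units (1 PB = 10^15 bytes), a sustained 11 GB/s with no mount, seek or cache overheads, and nominal line rates rather than measured throughput. All variable names are illustrative.

```python
TB = 10**12  # decimal units are assumed throughout
PB = 10**15
GB = 10**9

# Figures quoted in the text.
library_capacity_bytes = 133 * PB  # full initial library
cartridge_bytes = 20 * TB          # IBM enterprise cartridge
max_io_bytes_per_s = 11 * GB       # maximum library I/O
nsd_servers = 6
eth_ports_per_server, eth_gbps = 2, 100  # 2x 100 GbE per server
fc_ports_per_server, fc_gbps = 2, 32     # 2x 32 Gb Fibre Channel per server

# Derived quantities (idealized: sustained peak rate, no overheads).
cartridges = library_capacity_bytes // cartridge_bytes
full_pass_days = library_capacity_bytes / max_io_bytes_per_s / 86_400
tape_io_gbps = max_io_bytes_per_s * 8 / 10**9
ethernet_gbps_total = nsd_servers * eth_ports_per_server * eth_gbps
fc_gbps_total = nsd_servers * fc_ports_per_server * fc_gbps

print(f"cartridges in a full library: {cartridges}")
print(f"days to stream the full library once: {full_pass_days:.1f}")
print(f"tape I/O: {tape_io_gbps:.0f} Gb/s")
print(f"nominal Ethernet aggregate: {ethernet_gbps_total} Gb/s")
print(f"nominal Fibre Channel aggregate: {fc_gbps_total} Gb/s")
```

On these assumptions, the nominal network and Fibre Channel link rates both sit well above the library's peak tape I/O, and a single full pass over the library takes on the order of months, which fits the emphasis on total throughput rather than latency.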

In accordance with the overall NESE strategy, we only proceeded with the project once we had a solid commitment from an experienced operations team. In this case, the launch team and long-term commitment come from a joint effort led by Scott Yockel of Harvard FASRC (25 FTE), in collaboration with Wayne Gilmore’s Boston University Research Computing team (17 FTE). The Harvard FASRC team has already taken on NESE Ceph for the long term and is already working closely with the BU Research Computing group on the related NERC project [9]. The BU team has many years of experience with IBM Spectrum Scale software and is very well positioned to operate the NESE Tape front end.

As of this writing, the systems in the above figure have all been ordered and have mostly arrived at MGHPCC, and site preparation is ongoing for installation before the end of this calendar year. Thus, even with relatively modest funding from our DIBBS grant, we have been able to launch a major new shared storage service at MGHPCC with great potential for growth, sustainability and scientific impact, and with the potential to expand to exascale capacity over the next few years. Within the past few weeks, a proposal was made to the management of the U.S. ATLAS project for a buy-in to the new NESE Tape system. The proposal was approved, funding was obtained via NET2, and 50 PB of tape media has been purchased for an initial ATLAS storage deployment in connection with NET2. These developments have attracted the interest of our colleagues on the CMS experiment at MIT (Professor Christoph Paus and his group), and we have begun working on joint ATLAS/CMS storage in NESE Tape.