Massive data flows for NESE Exascale, where moving 1 PB per day should be the norm, require careful attention to the network.

Posted by Saul Youssef

This has led us to plan a scale-out of the network that begins to meet this need now and can be expanded easily later. Within MGHPCC, a number of projects are growing at the same time and reaching a critical point of cross-pollination. These projects all need high-bandwidth access to NESE Ceph and NESE Tape. They include the New England Research Cloud (NERC), Mass Open Cloud (MOC), Open Cloud Testbed (OCT), Northeast Tier 2 (NET2), FABRIC, and Open Storage Network (OSN). In addition to internal bandwidth between projects, external wide-area network bandwidth will become increasingly important.
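To put the 1 PB per day target in perspective, here is a short Python sketch that converts a daily volume into the average line rate it implies. It assumes decimal units (1 PB = 10^15 bytes) and a perfectly steady transfer with no protocol overhead or idle time, so real links need headroom beyond this figure. `required_gbps` is a hypothetical helper for illustration, not part of any NESE tooling.

```python
# Sustained bandwidth needed to move a given volume per day.
# Assumptions: decimal units (1 PB = 10**15 bytes) and a perfectly
# steady transfer with no protocol overhead or idle time.

SECONDS_PER_DAY = 86_400
BYTES_PER_PB = 10**15


def required_gbps(petabytes_per_day: float) -> float:
    """Return the average line rate in Gbit/s needed to move the volume in one day."""
    bits = petabytes_per_day * BYTES_PER_PB * 8
    return bits / SECONDS_PER_DAY / 1e9


if __name__ == "__main__":
    print(f"1 PB/day needs about {required_gbps(1.0):.1f} Gbit/s sustained")
```

Under these assumptions, 1 PB per day works out to roughly 92.6 Gbit/s sustained around the clock, which is most of a single 100 Gbps link.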

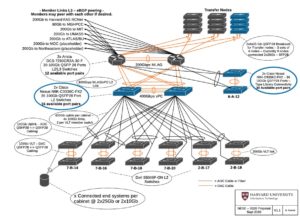

In the proposed future NESE network, NESE Ceph will get a new pair of Cisco Nexus N9K switches for back-end traffic, and NESE Tape will also get a pair of Cisco Nexus N9K switches. The existing Arista switch will be repurposed solely as a peering switch between projects and the institutions' core switches. This also allows more Data Transfer Nodes (DTNs) to be added (in blue) and frees 12 additional open ports.

The figure above describes the future network needed to support the NESE Tape deployment and additional projects. In this figure, every device (network switches, data transfer nodes, and storage) in grey is existing infrastructure. In the current setup, we have run out of switch port capacity to support all of the new projects and the NESE Tape installation. That is because the Arista switches currently carry both the NESE back-end Ceph (east-west) traffic and all of the uplinks to 9 RGW/DTN servers and 7 institutional core network routers. Under the current model, data going to or from the Internet must traverse the Harvard firewall: Internet2 traffic goes directly out to the Northern Crossroads (NOX) local ISP at 100 Gbps, but public Internet traffic has to route back to the Harvard campus gateway over a 3×10 Gbps connection.
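As a rough check on the two external paths described above, this Python sketch computes the most data each could move in a day if it were saturated continuously. The link rates come from the text; the decimal units (1 PB = 10^15 bytes), 24-hour saturation, and zero protocol overhead are simplifying assumptions, and `petabytes_per_day` is a hypothetical helper.

```python
# Best-case daily volume each external path could carry.
# Assumptions: decimal units (1 PB = 10**15 bytes), links saturated
# 24 hours a day, no protocol overhead; real throughput will be lower.

SECONDS_PER_DAY = 86_400
BYTES_PER_PB = 10**15

# Link capacities in Gbit/s, as stated in the text.
PATHS = {
    "Internet2 via NOX": 100,
    "Public Internet via Harvard (3 x 10 Gbps)": 3 * 10,
}


def petabytes_per_day(link_gbps: float) -> float:
    """Upper bound on PB moved per day over a link of the given rate."""
    bytes_per_day = link_gbps * 1e9 * SECONDS_PER_DAY / 8
    return bytes_per_day / BYTES_PER_PB


if __name__ == "__main__":
    for name, gbps in PATHS.items():
        print(f"{name}: at most {petabytes_per_day(gbps):.2f} PB/day")
```

Even in this best case, the 100 Gbps Internet2 path carries only about 1.08 PB/day and the 3×10 Gbps public Internet path about 0.32 PB/day, which illustrates why the public Internet route in particular is a bottleneck.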

Because NESE and the collaboration between projects within MGHPCC have grown so much, this network model is essentially at capacity and will not allow for future expansion. We have therefore decided to take a two-phase approach: in the near term, meet the expansion goals for NESE Tape; in the long term, create direct Internet access for the NESE project with its own core router, firewall, and independent Internet feed.

In Phase 1, we have added a pair of Cisco Nexus (N9K-C9336C-FX2) switches that replace the Arista switches for the back-end Ceph (east-west) traffic. This allows for 16 more top-of-rack network switches (16 racks of Ceph storage). The Arista switch becomes the peering switch between projects, giving NESE Tape full connectivity and leaving 12 pairs of ports open for future projects. In addition, a second pair of Cisco Nexus (N9K-C9336C-FX2) switches has been added to support NESE Tape traffic; it also allows 30 more storage servers to be added to the IBM Spectrum Scale disk cache in front of the IBM Tape Library. This provides full bi-directional bandwidth between NESE Ceph and Tape, which benefits a researcher who wants to “check out” an older dataset from NESE Tape, deposit it in NESE Ceph, and connect it to cloud resources from NERC, MOC, OCT, and the like.